
[[Category:enPortal 5.5]]

{{DISPLAYTITLE:Clustering & Failover}}

== Overview ==

enPortal is implemented using a highly scalable web application architecture. As a Java application running inside an Apache Tomcat server, enPortal can make use of multi-core and multi-processor systems with large amounts of RAM on 64-bit operating systems. In addition to scaling vertically on a single system, enPortal supports horizontal scaling through the use of a shared configuration database, either to handle even greater loads or to provide a high-availability environment.

enPortal can be used in the following configurations:

#<b>Load Balanced</b>: Two or more nodes are fully operational at all times. The load balancer directs traffic to nodes using standard load balancing techniques such as round-robin, fewest sessions, or smallest load. If a node is detected as down, it is removed from the active pool.
#<b>Failover</b>: A two-node configuration in which both nodes are running but all traffic is routed to the primary node. If the primary node is detected as down, the load balancer redirects traffic to the secondary node.
#<b>Cold Standby</b>: A two-node configuration in which the secondary node is offline during normal operation. If the primary node is detected as down, the secondary node is brought online and traffic is redirected to it.

Where high availability is required, a cluster configuration is recommended regardless of load. Where load is a concern, refer to the [[enportal/5.5/admin/enPortal_installation/performance_and_sizing|Performance Tuning & Sizing]] documentation.
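The practical difference between the load-balanced and failover configurations is how the load balancer picks a node. The Python sketch below illustrates that choice only. It is not part of enPortal or of any particular load balancer. The function names are hypothetical, and the <tt>healthy</tt> map is assumed to be kept up to date by some external availability check. Cold standby is left out because it involves starting a node rather than choosing one.

```python
from itertools import count


def pick_load_balanced(nodes, healthy, counter):
    """Load-balanced mode: round-robin over the nodes currently marked healthy."""
    pool = [node for node in nodes if healthy.get(node, False)]
    if not pool:
        raise RuntimeError("no healthy enPortal node available")
    # A node detected as down is simply absent from the active pool.
    return pool[next(counter) % len(pool)]


def pick_failover(primary, secondary, healthy):
    """Failover mode: all traffic goes to the primary unless it is down."""
    if healthy.get(primary, False):
        return primary
    if healthy.get(secondary, False):
        return secondary
    raise RuntimeError("neither enPortal node is available")


if __name__ == "__main__":
    health = {"node1": True, "node2": False, "node3": True}
    rr = count()
    print([pick_load_balanced(["node1", "node2", "node3"], health, rr) for _ in range(4)])
    print(pick_failover("node1", "node2", {"node1": False, "node2": True}))
```

Real load balancers offer weighted and least-connection variants of the same idea. The point here is only that a node which fails its health check drops out of the pool.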

== Architecture & Licensing ==

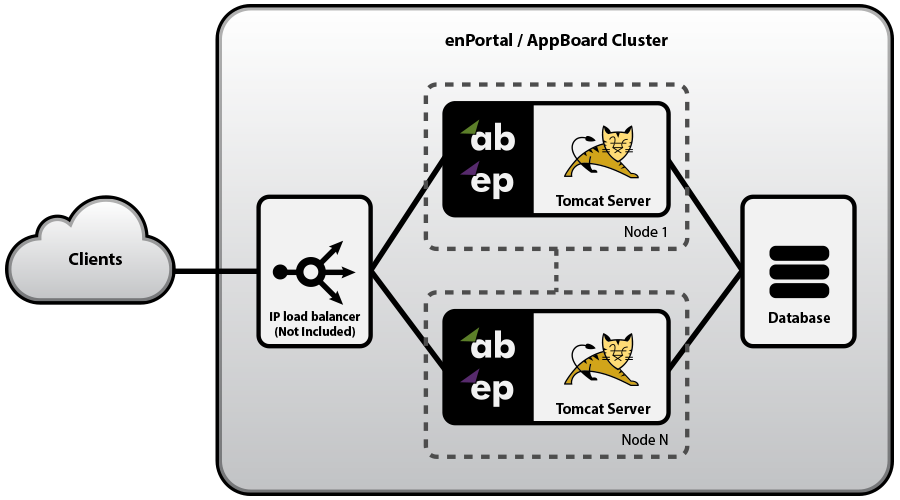

[[File:Simple_Cluster.png|frameless|756px|center|Two Node Cluster Architecture Diagram]]

Whether running a load-balanced, failover, or cold-standby configuration, the following components are required:

* An enPortal installation on each node. Each node requires its own license.
* An external (shared) configuration database. This database is not provided by Edge and should reside on a different host from the enPortal servers. In high-availability environments the database itself should also be highly available. See the [[enportal/5.5/overview/system_requirements|System Requirements]] for supported external configuration databases.
* A load balancer. This component is not provided by Edge but is required in cluster configurations.

== Cluster Configuration ==

The overall cluster configuration is made up of separate parts that follow the cluster architecture:

# Load balancer configuration.
# Shared enPortal configuration, via an external shared configuration database.
# Per-node enPortal configuration and filesystem assets.

Note that establishing a new cluster and maintaining an existing one may require different approaches, as outlined below.

=== Shared Configuration Database ===

In simple single-server enPortal configurations, the built-in, in-memory H2 configuration database is recommended. In cluster configurations, however, the configuration must be shared and kept in sync across two or more nodes, so an external configuration database is required. Setting up an external database for redundant operation has two main steps:

# Configure enPortal to use an external database (instead of the built-in H2). This process is documented in isolation on the [[enportal/5.5/admin/configuration_database|Configuration Database]] page.
# Configure enPortal to operate in redundancy mode.

{{Note|The above outlines the main steps in isolation; please refer to the ''Establishing a Cluster'' section for more details.}}

=== Per-Node Configuration & Assets ===

While the shared configuration database takes care of enPortal content and provisioning information, other configuration and filesystem assets must be maintained on each node:

* the license file
* the configuration database connection details
* all other filesystem assets, such as login pages, look-and-feel images, enPortal PIM files, and any other pieces built into the solution, such as custom JSPs, CGIs, and HTML/CSS/JS files.

The recommended approach, which also ensures that full backups of the system are made, is to configure the [[enportal/5.5/admin/enPortal_installation/backup_and_recovery#Customizing_the_Export|Backup export list]] to include all filesystem assets. The resulting archive can then be used to maintain the filesystem components when establishing or updating a cluster. Other approaches, such as filesystem synchronization tools, may also be suitable.

The license and database configuration need to be handled when the cluster is first established, but do not need to change after that point.
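When a filesystem synchronization tool is used instead of, or alongside, the backup archive, it helps to confirm that every node holds the same per-node assets. The Python sketch below is one hypothetical way to do that. It builds a SHA-256 manifest of a directory tree and lists the relative paths that differ between two manifests. Which directories to compare, and how manifests are transferred between hosts, are assumptions left to the reader.

```python
import hashlib
from pathlib import Path


def manifest(root):
    """Map every file under root (as a relative POSIX path) to its SHA-256 digest."""
    root = Path(root)
    digests = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            rel = path.relative_to(root).as_posix()
            digests[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def drift(reference, other):
    """Relative paths that are missing, extra, or different on the other node."""
    return sorted(
        rel for rel in reference.keys() | other.keys()
        if reference.get(rel) != other.get(rel)
    )
```

For example, run <tt>manifest()</tt> against the same asset directory on the primary and on a secondary node, serialize both results (e.g. with <tt>json</tt>), and pass them to <tt>drift()</tt>. An empty list means the trees match.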

=== Load Balancer ===

The load balancer can distribute sessions across one or more enPortal nodes using any standard load balancing algorithm (e.g. round-robin, smallest load, fewest sessions). The only requirement is that '''session affinity is maintained''', so that a single user is always routed to the same enPortal node for the full duration of the session.

The two session cookies used by enPortal are <tt>JSESSIONID</tt> and <tt>enPortal_sessionid</tt>. When configuring the load balancer for session affinity, it is recommended to use '''<tt>enPortal_sessionid</tt>''' to avoid conflicts with other applications that may also set a <tt>JSESSIONID</tt> cookie.
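Each load balancer product configures cookie-based affinity in its own way, so the exact setup depends on the product chosen. As a product-neutral illustration only, the Python sketch below reads <tt>enPortal_sessionid</tt> from the request's Cookie header. It sends a known session back to the node that issued it and assigns new sessions round-robin. The <tt>StickyRouter</tt> class and its methods are hypothetical. A real load balancer would also learn the session ID from the node's Set-Cookie response header and expire stale entries.

```python
from http.cookies import SimpleCookie
from itertools import cycle

AFFINITY_COOKIE = "enPortal_sessionid"  # recommended affinity cookie (not JSESSIONID)


class StickyRouter:
    """Hypothetical cookie-based session affinity; not a real load balancer API."""

    def __init__(self, nodes):
        self._rotation = cycle(nodes)  # round-robin for sessions not seen before
        self._affinity = {}            # enPortal_sessionid value -> node

    @staticmethod
    def session_id(cookie_header):
        """Extract the enPortal_sessionid value from a Cookie header, if present."""
        cookies = SimpleCookie()
        cookies.load(cookie_header or "")
        morsel = cookies.get(AFFINITY_COOKIE)
        return morsel.value if morsel is not None else None

    def route(self, cookie_header):
        """Return the node for this request, keeping existing sessions in place."""
        sid = self.session_id(cookie_header)
        if sid is not None and sid in self._affinity:
            return self._affinity[sid]
        return next(self._rotation)

    def learn(self, sid, node):
        """Remember which node issued a new enPortal_sessionid (seen in Set-Cookie)."""
        self._affinity[sid] = node
```

Keying on <tt>enPortal_sessionid</tt> rather than <tt>JSESSIONID</tt> means another application's <tt>JSESSIONID</tt> on the same domain cannot pull a user onto the wrong node.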

The load balancer can use the following URL to test enPortal availability:

<tt>http://''server:port''/enportal/check.jsp</tt>

This script returns HTTP status code 200 (OK) if all enPortal components are running properly, or 500 (Internal Server Error) if there is an issue. If the enPortal server is not running at all, there is no response.
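The following is a minimal sketch of how a monitoring script or custom health check could interpret <tt>check.jsp</tt>, using only the Python standard library. It assumes the node is reachable over plain HTTP at the given base URL. The function names and the up/error/down labels are illustrative and are not part of enPortal.

```python
import urllib.error
import urllib.request


def probe_status(base_url, timeout=5.0):
    """Return the HTTP status of check.jsp, or None if the node gives no response."""
    url = base_url.rstrip("/") + "/enportal/check.jsp"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urlopen raises for 4xx/5xx; check.jsp reports a failed component as 500.
        return err.code
    except OSError:
        # Connection refused, host unreachable, or timeout: the server is not answering.
        return None


def classify(status):
    """Map a probe result onto a load balancer pool decision."""
    if status == 200:
        return "up"      # all enPortal components are running
    if status is None:
        return "down"    # enPortal is not running or not reachable
    return "error"       # the server answered but reported a problem (e.g. 500)
```

For example, <tt>classify(probe_status("http://server:8080"))</tt>, where <tt>server:8080</tt> stands in for the node's own host and port. Both "error" and "down" should take the node out of the active pool.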

=== Virtualized Environments ===

The clustering configuration is the same whether enPortal runs on bare metal or in a virtualized environment.

Some virtualization environments offer their own layer of fault tolerance, although this is usually aimed at reducing or eliminating the impact of hardware failure. For example, VMware Fault Tolerance transparently fails a guest over from a failed physical host to a different physical host so that everything continues uninterrupted. This type of system is useful on its own, but it may not detect application-level failures, which can also occur.

== Establishing a Cluster ==

The following process can be used to establish a cluster environment. If you have skipped straight here, please go back and read the previous sections to understand the overall architecture and configuration components.

The process assumes an existing environment, although a clean install can be used too.

=== Initial Preparation ===

# Set up an external database to serve as the shared configuration database; you will need its access details. Do not configure enPortal to use the external database at this stage.
# Set enPortal to operate in redundancy mode. This is enabled with the following setting in <tt>[INSTALL_HOME]/server/webapps/enportal/WEB-INF/config/custom.properties</tt>:
#: <tt>hosts.redundant=true</tt>
# Create a full backup archive of the existing system. Ensure the backup has been configured to include all custom filesystem assets. Refer to the [[enportal/5.5/admin/enPortal_installation/backup_and_recovery#Customizing_the_Export|Backup & Recovery]] page for more information. It is recommended that this archive also include all required JDBC database drivers, including the JDBC driver for the external configuration database.
# Have two or more systems ready to be configured for clustering. Note that the existing system can also be used without re-installing.
# Have the enPortal turnkey distribution and a license file for each node.

{{Note|If re-establishing a cluster using the existing external database, '''all cluster nodes must be shut down''' before proceeding.}}
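Step 2 above can be scripted when preparing several installations. The Python sketch below rewrites <tt>custom.properties</tt> so that <tt>hosts.redundant=true</tt> appears exactly once, dropping any earlier assignment of that key. It is a hypothetical helper, not an enPortal tool. It deliberately handles only simple <tt>key=value</tt> lines; files that use <tt>:</tt> or whitespace separators, or line continuations, would need a fuller properties parser. <tt>install_home</tt> stands in for the <tt>[INSTALL_HOME]</tt> placeholder used above.

```python
from pathlib import Path

REDUNDANCY_KEY = "hosts.redundant"
CUSTOM_PROPERTIES = Path("server/webapps/enportal/WEB-INF/config/custom.properties")


def enable_redundancy(text):
    """Return properties text with hosts.redundant=true set exactly once."""
    kept = [
        line for line in text.splitlines()
        # Drop any existing key=value assignment of hosts.redundant (simple form only).
        if line.split("=", 1)[0].strip() != REDUNDANCY_KEY
    ]
    kept.append(REDUNDANCY_KEY + "=true")
    return "\n".join(kept) + "\n"


def enable_redundancy_in(install_home):
    """Apply enable_redundancy to custom.properties under the given INSTALL_HOME."""
    props = Path(install_home) / CUSTOM_PROPERTIES
    current = props.read_text() if props.exists() else ""
    props.write_text(enable_redundancy(current))
```

Because the helper removes and re-adds the key, running it twice leaves a single <tt>hosts.redundant=true</tt> line.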

=== Setup Process ===

The following applies to the '''primary node'''. Even in a purely load-balanced configuration, pick one of the nodes as the primary for the purpose of establishing the cluster:

# If converting the existing deployment into a cluster deployment, first shut down enPortal.
# Otherwise, deploy the enPortal turnkey and ensure a valid environment. See the [[enportal/5.5/admin/enPortal_installation/installation|Installation]] documentation for more information.
# Configure enPortal to use an external configuration database. Follow the instructions for <tt>dbsetup</tt> on the [[enportal/5.5/admin/configuration_database|Configuration Database]] page, but '''do not''' restore any archives or perform a dbreset.
# Load the complete backup archive created during initial preparation:
#: <tt>portal '''Apply''' -jar ''archive.jar''</tt>
# Install the license file if it is not already installed. Remember that the license is node-specific.
# Start enPortal. At this point you should have a working cluster of one node!
# Verify that the enPortal <tt>catalina.out</tt> log file contains the following lines:
#: Establishing a connection to the external configuration database:
#: <tt>Connecting to the ''DB_TYPE'' database at ''jdbc:mysql://HOST:PORT/DB_NAME''...Connected.</tt>
#: Redundancy mode is enabled:
#: <tt>''date@timestamp'' Redundancy support enabled</tt>
# Also verify that you can log in to this instance of enPortal and that all content is as expected.
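The log check in the verification step can be automated. The Python sketch below searches <tt>catalina.out</tt> text for the two lines quoted above. The date prefix, database type, and JDBC URL differ per installation, so the patterns match only the stable wording. That wording is an assumption based on the sample lines shown, so adjust the patterns if your log differs. The function names are hypothetical, and the path to <tt>catalina.out</tt> must be supplied by the caller.

```python
import re
from pathlib import Path

# Stable parts of the two catalina.out lines; DB type, JDBC URL and date vary.
CONNECTED = re.compile(r"Connecting to the (.+?) database at (\S+?)\.\.\.Connected\.")
REDUNDANT = re.compile(r"Redundancy support enabled")


def verify_log(text):
    """Return (jdbc_url or None, redundancy_enabled) found in catalina.out text."""
    match = CONNECTED.search(text)
    jdbc_url = match.group(2) if match else None
    return jdbc_url, REDUNDANT.search(text) is not None


def verify_log_file(path):
    """Read a catalina.out file and run verify_log on its contents."""
    return verify_log(Path(path).read_text(errors="replace"))
```

A result of <tt>(None, ...)</tt> suggests the node is not using the external database. A <tt>False</tt> second element suggests <tt>hosts.redundant=true</tt> was not picked up.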

The following applies to all '''other nodes''' in the cluster:

# Deploy the enPortal turnkey and ensure a valid environment. See the [[enportal/5.5/admin/enPortal_installation/installation|Installation]] documentation for more information.
# Load the complete backup archive created during initial preparation. Note that this command is '''different''' from the one used on the primary node:
#: <tt>portal '''FilesImport -skiplist apply''' -jar ''archive.jar''</tt>
# Ensure the database configuration is '''not''' loaded on enPortal startup by renaming the following files, appending <tt>.disabled</tt> to their filenames:
#* <tt>[INSTALL_HOME]/server/webapps/enportal/WEB-INF/xmlroot/server/load_*.txt</tt>
# Configure enPortal to use an external configuration database. Follow the instructions for <tt>dbsetup</tt> on the [[enportal/5.5/admin/configuration_database|Configuration Database]] page, but '''do not''' load any archives or perform a dbreset.
# Install the license file if it is not already installed. Remember that the license is node-specific.
# Start enPortal. At this point you should have a cluster of two or more nodes!
# Verify this instance in the same way as the primary instance.
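The renaming step for the other nodes can be scripted as below. This is a minimal Python sketch that assumes <tt>install_home</tt> is the node's <tt>[INSTALL_HOME]</tt>. It renames each matching <tt>load_*.txt</tt> file by appending <tt>.disabled</tt>. The script is safe to re-run, because files that are already disabled no longer match the pattern. Run it only on non-primary nodes, and before enPortal is started.

```python
from pathlib import Path

# Location of the load_*.txt files relative to INSTALL_HOME, as given in the step above.
LOAD_DIR = Path("server/webapps/enportal/WEB-INF/xmlroot/server")


def disable_load_files(install_home):
    """Rename load_*.txt to load_*.txt.disabled; return the new file names."""
    server_dir = Path(install_home) / LOAD_DIR
    renamed = []
    for path in sorted(server_dir.glob("load_*.txt")):
        target = path.with_name(path.name + ".disabled")
        path.rename(target)
        renamed.append(target.name)
    return renamed
```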

Additional verification of the whole cluster can be performed by logging in to the separate nodes from different locations, opening the [[enportal/5.5/admin/system_administration/manage_sessions|Session Management]] system administration page, and checking that all sessions are visible regardless of which node you are logged in to.

== Maintaining a Cluster ==

=== Product Updates ===

Full product updates require a process similar to establishing a new cluster. First create a full archive and test it in isolation with the new build to ensure there are no upgrade issues. If changes need to be made because of the upgrade, produce a new full archive on the new version of the product and use it when establishing the cluster. Because the entire cluster must be taken down and re-established, this process requires some scheduled downtime.

In high-availability environments where scheduled downtime is not possible, an alternative is to take down one node, re-establish it as a new cluster with a different external configuration database, and then fail over all clients to that node. The other nodes can then be re-established against the new configuration database, and the load balancer configuration updated to bring all nodes back online.

Patch updates are usually overlaid onto an existing installation and do not modify the configuration. They can be applied in place and typically only require the enPortal server to be restarted.

=== Content/Configuration Updates ===

Content and provisioning changes made directly on the cluster are immediately available to all cluster nodes; nothing further needs to be done.

For content and provisioning changes made in a separate development or staging environment, create a full archive and deploy it to production. This essentially requires re-establishing the cluster, with the shortcut that enPortal is already installed and licensed and the database configuration is already in place.

Latest revision as of 18:10, 17 November 2014

Overview

enPortal is implemented using a highly scalable web application architecture. As a Java application running inside an Apache Tomcat server, enPortal is able to make use of multi-core and multi-processor systems with large amounts of RAM on 64-bit operating systems. In addition to scaling vertically on a single system, enPortal supports horizontal scaling to handle even greater loads and/or to provide for high availability environments through the use of a shared configuration database. enPortal can be used in the following configurations:

- Load Balanced: Two or more nodes are fully operational at all times. The load balancer directs traffic to nodes based on standard load balancing techniques such as round-robin, fewest sessions, smallest load, etc... If a server is detected as down it is removed from the active pool.

- Failover: A two-node configuration with both nodes running but all traffic is routed to the primary node unless it is detected to be down. At this point the load balancer re-directs traffic to the secondary node.

- Cold Standby: A two-node configuration where the secondary node is offline in normal operation. If the primary node is detected to be down the secondary node is brought online and traffic re-directed.

In cases where high-availability is required then regardless of the load a cluster configuration is recommended. In cases where load is a concern refer to the Performance Tuning & Sizing documentation for more information.

Architecture & Licensing

Whether running a load-balanced, failover, or cold-standby configuration the following components are required:

- enPortal installation per node, this requires a separate license for each node.

- External (shared) configuration database. This database is not provided by Edge and is recommended to reside on a different host to the enPortal servers. In high availability environments the database itself should also highly available. See the System Requirements for supported external configuration databases.

- Load Balancer. This component is not provided by Edge but is required in cluster configurations.

Cluster Configuration

The overall cluster configuration is made up of separate parts that follow the cluster architecture:

- Load Balancer configuration

- Shared enPortal configuration: via an external shared configuration database.

- per-node enPortal configuration and filesystem assets.

Also consider that establishing a new cluster and maintaining the cluster may have different approaches as outlined below.

In simple single-server enPortal configurations it is recommended to use the built-in, in-memory, H2 configuration database. However, in cluster configurations the configuration needs to be shared and kept in sync across two or more nodes so an external configuration database is required. Setting up an external database for redundancy operation has two main steps:

- Configure enPortal to use an external database (versus the build-in H2). This process is documented in isolation on the Configuration Database page.

- Configure enPortal to operate in redundancy mode.

Per-Node Configuration & Assets

While the shared configuration database takes care of enPortal content and provisioning information, there is other configuration and filesystem assets that need to be maintained per node:

- license file

- configuration database connection details

- all other filesystem assets such as login pages, images for look and feel, enPortal PIM files, and other miscellaneous pieces that have been built into the solution such as custom JSPs, CGIs, HTML/CSS/JS, etc...

The recommended approach, which also serves to ensure full-backups are made of the system, is to configure the Backup export list to include all filesystem assets. Then when establishing, or updating a cluster, the archive can be used to maintain the filesystem components. Also, other custom approaches may also be suitable such as filesystem synchronization tools.

The license and database configuration will need to be handled when first establishing the cluster but will not need to be changed after that point.

Load Balancer

The Load Balancer can distribute sessions to one or more enPortal nodes using any standard load balancing algorithm (e.g. Round-Robin, smallest load, fewest sessions, etc.). The only requirement is that the session affinity is maintained such that a single user is always routed to the same enPortal node during the full duration of the session.

The two session cookies used by enPortal are JSESSIONID and enPortal_sessionid. When configuring the Load Balancer for session affinity, it is recommended to use enPortal_sessionid to avoid any conflicts with other applications that may also have a JSESSIONID cookie.

The following URL can be used by the load balancer as a means of testing enPortal availability:

http://server:port/enportal/check.jsp

This script returns a HTTP status code 200 (success) if all components of enPortal are running properly, otherwise it returns a 500 (internal error) if there is an issue. And in the case the enPortal server isn't running, then of course there will be no response.

Virtualized Environments

Whether running on the bare metal or within virtualized environments the clustering configuration remains the same.

Some virtualization environments may offer their own layer of fault tolerance although this is usually targeted at reducing/eliminating the impact of hardware failure - e.g. VMware Fault Tolerance to transparently failover a guest from a failed physical host to a different physical host such that everything continues un-interrupted. This type of system is useful on it's own but may not be aware of application-level failures that can also occur.

Establishing a Cluster

The following process can be used to establish a cluster environment. If you're skipped straight here, please go back and read over the previous sections to understand the overall architecture and configuration components.

The following process assumes an existing environment, although a clean install environment can be used too.

Initial Preparation

- Setup an external database for the shared configuration database, you will need the access details. Do not actually configure enPortal to use the external database at this stage.

- Set enPortal to operate in redundancy mode. This is enabled using the following setting in [INSTALL_HOME]/server/webapps/enportal/WEB-INF/config/custom.properties:

- hosts.redundant=true

- Create a full backup archive of your existing system. Ensure this backup has been configured to include all custom filesystem assets. Refer to the Backup & Recovery page for more information. It's recommended this archive also include all required JDBC database drivers, including the JDBC driver required for the external configuration database.

- Have two or more systems ready to be configured for clustering. Note it's possible to also use the existing system without having to re-install.

- Have the enPortal turnkey distribution and license files for each node.

Setup Process

The following applies to the primary node. Even in a purely load-balanced configuration pick one of the nodes as the primary for purposes of establishing the cluster:

- If converting the existing deployment into a cluster deployment, first shutdown enPortal.

- Otherwise, deploy the enPortal turnkey and ensure a valid environment. See the Installation documentation for more info.

- Configure enPortal to use an external configuration database. Follow the instructions to use dbsetup on the Configuration Database page, but do not restore any archives or perform a dbreset.

- Load the complete backup archive created in the initial preparation:

- portal Apply -jar archive.jar

- Install the license file if not already - remember this is node specific.

- Start enPortal. At this point you should have a working cluster of one node!

- Verify in the enPortal catalina.out log file the following lines:

- Establishing a connection to the external configuration database:

- Connecting to the DB_TYPE database at jdbc:mysql://HOST:PORT/DB_NAME...Connected.

- Redundancy mode is enabled:

- date@timestamp Redundancy support enabled

- Also verify you can log into this instance of enPortal and all content is as expected.

The following applies to all other nodes in the cluster:

- Deploy the enPortal turnkey and ensure a valid environment. See the Installation documentation for more info.

- Load the complete backup archive created in the initial preparation. Note this is different than for the primary node:

- portal FilesImport -skiplist apply -jar archive.jar

- Ensure database configuration is not loaded on enPortal startup by renaming the following files by adding .disabled to their filenames:

- [INSTALL_HOME]/server/webapps/enportal/WEB-INF/xmlroot/server/load_*.txt

- Configure enPortal to use an external configuration database. Follow the instructions to use dbsetup on the Configuration Database page, but do not load any archives or perform a dbreset.

- Install the license file if not already - remember this is node specific.

- Start enPortal. At this point you should now have a cluster of two or more nodes!

- Verify this instance the same as the primary instance.

Additional verification of the whole cluster can be performed by logging into the separate nodes from different locations and viewing the Session Management system administration page and verifying that all sessions are visible regardless of the node logged into.

Maintaining a Cluster

Product Updates

For full product updates a process similar to establishing a new cluster is required. A full archive should be performed. This should be tested in isolation with the new build to ensure there are no upgrade issues. If changes need to be made due to the upgrade then produce a new full archive on the new version of the product for use when establishing the cluster. As the entire cluster will need to be taken down, and re-established, this process will require some scheduled downtime to complete.

In high-availability environments if scheduled downtime is not possible then another approach would be to take down one node, re-establish a new cluster with a different external configuration database, then failover all clients to the new node. Other nodes can then be re-established pointing to the new configuration database, and then update the load balancer configuration to bring all nods back online.

For patch updates these are usually overlaid onto an existing install and do not modify configuration. This can be done in-place and typically just require the enPortal server to be restarted.

Content/Configuration Updates

Content and provisioning changes made directly on the cluster are immediately available to all cluster nodes and nothing further needs to be done.

For content and provisioning changes made in a separate development or staging environment then a full archive should be made and then deployed to production. This would essentially require a re-establishment of the cluster with the shortcut that enPortal is already installed, licensed, and the database configuration is in place.